Networking Configuration and Physical Requirements

Guide

Network design, setup and configuration are the first steps in bringing up a working cloud solution. A well-designed logical network helps the developers stay on plan and reduces the chance of running into serious problems (which may force the entire solution to be redesigned).

Arriving at a working network solution requires that you have fully reviewed the OpenStack platform deployment process, or have carried it out before.

We started with this book, but as we read further we could not find its physical network plan (maybe it was obvious to the author anyway!). The second book, however, which is a complete guide to running a scalable OpenStack solution with a focus on the "neutron" component, presents a clear physical network setup guide. Since the goals and reasons behind its network design are clearly laid out in its first chapter, we will not repeat them here and will only point out the important notes.

<- Our guide through this adventure


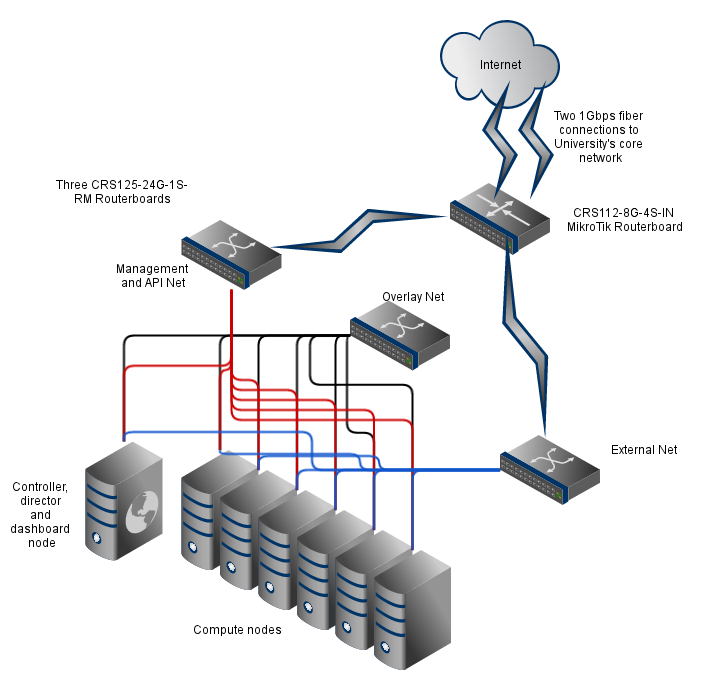

Physical Network Design

OpenStack needs four logical networks for its different functions:

- API Network

- Internal Openstack Management Network

- Overlay Network

- VM's External Network

The book suggests combining the first two and proposes setting up three physical networks, completely separated from each other. It notes that these networks can be merged using complicated VLAN techniques (which we won't do).

Three physical networks require each node to have three Network Interfaces.
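The merge described above can be written down as a small lookup table. The following is a minimal sketch, not part of the book's material: the physical network labels (`management`, `overlay`, `external`) are names chosen here for illustration, and the only rule it encodes is the one stated in the text, one network interface per physical network.

```python
# Logical OpenStack networks mapped to the physical network that carries them.
# The physical network labels are illustrative names, not OpenStack settings.
LOGICAL_TO_PHYSICAL = {
    "API": "management",
    "Internal OpenStack management": "management",
    "Overlay": "overlay",
    "VM external": "external",
}


def physical_networks(mapping):
    """Return the distinct physical networks in first-seen order."""
    return list(dict.fromkeys(mapping.values()))


def nics_per_node(mapping):
    """Each node needs one network interface per physical network."""
    return len(physical_networks(mapping))


if __name__ == "__main__":
    print(physical_networks(LOGICAL_TO_PHYSICAL))
    print(nics_per_node(LOGICAL_TO_PHYSICAL))
```

Merging more logical networks onto one physical network (for example with VLANs) would only change the values in the table; the interface count follows from it.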

The final picture of our physical network design will be something like this:

We then need to connect the management and external networks to the internet using our switches.

We are planning these 3 networks for a 20-node COTS environment, so instead of using a single switch with VLANs, we will create 3 separate physical networks using three 24-port network switches, with a smaller RouterBOARD above the two of them that need internet connectivity (as mentioned above), like this:

Hardware Selection

Given the above network design and starting from scratch, building a network infrastructure requires thorough planning.

The infrastructure will be located in a room with a perimeter of around 30 meters. We first provide a complete list of the required hardware and devices, and then explain how we came to choose them.

| Title | Quantity | Description |
|---|---|---|
| MikroTik CRS125-24-1S-RM Switch | 3X | To provide 3 separate physical networks |
| MikroTik CRS-112-8G-4S Switch | 1X | To connect the physical networks to the University core network and the internet, using a fiber connection |
| Compatible SFP for MikroTik switch | 2X | We will connect 2 fiber cables to our edge CRS-112 switch |
| Cat6a shielded patch panel, 24-port | 3X | |
| Fiber-optic cabling | 2X | To connect to the University infrastructure |
| A small 24-unit standard rack | 1X | To hold the networking devices |
| Cat6a SFTP cabling | up to 3 rolls | These rolls will be used to provide internal network connections |
| Cat6a SFTP 0.3m patch cord | 65X | Connection between patch panels and switches |
| Cat6a SFTP 2m patch cord | 25X | To connect servers to the physical networks |
| Trunking and wall sockets | for a 25-meter environment | |
| Rackmount power modules | 2X | To provide power for rack-mounted devices |
| Cable management modules | 3X | |

- As mentioned before, we have a COTS server environment consisting of 20 special-purpose desktop computers, which we will spread around the dedicated room.

- The servers have only one onboard network interface, so we fitted each with two 1G PCI-e network interface cards.

  - We found the "DLink DGE-560T" to be the most suitable choice.

  - Two-port server NICs are great but costly, so we ruled them out.

- We selected shielded Cat6a cabling (running at 1 Gbps) because of the high level of noise in the environment and the distance between the servers and the networking devices. Lower-grade cabling (for example UTP or Cat5) would reduce costs considerably, but the drop in quality of service would also be noticeable.

- The connection between our networks and Internet will be established using a pair of fiber-optic cables.

- We chose MikroTik switches for their easy-to-use interface, their Layer 3 capabilities and their lower price. A similar set of Cisco devices would probably cost more than twice as much, and cheaper solutions (like D-Link switches) do not provide Layer 3 NAT or bandwidth control.

- The management network switch will also act as a NAT provider, so that our nodes can reach the internet for package management and installation. It will also provide port forwarding to the controller node, to allow access to Horizon and SSH from outside.
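The counts in the notes above can be sanity-checked with a little arithmetic. The sketch below is only an illustration: the node, NIC and port counts come from the text, the uplink counts per switch are taken from the switch connection list in this guide, and the function names are made up here.

```python
NODES = 20              # COTS desktop machines (from the text)
ONBOARD_NICS = 1        # onboard interfaces per node (from the text)
PHYSICAL_NETWORKS = 3   # management, overlay, external
SWITCH_PORTS = 24       # ports on each access switch

# Ports on each switch that go up to the edge router
# (taken from the switch connection list in this guide).
UPLINKS = {"management": 1, "overlay": 1, "external": 2}


def extra_nics_needed(nodes=NODES):
    """Add-on NIC cards needed so every node reaches all three networks."""
    return nodes * (PHYSICAL_NETWORKS - ONBOARD_NICS)


def free_ports(network, nodes=NODES):
    """Ports left on a switch after connecting every node and the uplinks."""
    used = nodes + UPLINKS[network]
    if used > SWITCH_PORTS:
        raise ValueError(f"{network}: {used} ports needed, only {SWITCH_PORTS} available")
    return SWITCH_PORTS - used


if __name__ == "__main__":
    print("add-on NICs:", extra_nics_needed())
    for name in UPLINKS:
        print(name, "free ports:", free_ports(name))
```

Under these assumptions a 24-port switch per network leaves a few spare ports even with all 20 nodes connected, which is why a single switch per physical network is enough.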

The three physical networks are each identified by a color code. All wiring in the system is based on these:

- Management Network: GREEN

- Overlay Network: BLUE

- External Network: ORANGE

Physical Switch Connections

- GREEN Switch

  - interfaces 1-10 > Physical PCs (first interface)

  - interface 24 > Edge Router (interface GE2)

- BLUE Switch

  - interfaces 1-10 > Physical PCs (second interface)

  - interface 24 > Edge Router (interface GE1)

- ORANGE Switch

  - interfaces 1-10 > Physical PCs (third interface)

  - interfaces 23-24 > Edge Router (interfaces GE3-GE4)

- Edge Router

  - interface GE5 > BLUE Switch (interface 24)

  - interface GE6 > GREEN Switch (interface 24)

  - interfaces GE7-GE8 > ORANGE Switch (interfaces 23-24)

  - interfaces SFP2-3 > Faculty core switch (interfaces SFP7-8)
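A cabling plan like this is recorded twice, once from the switch side and once from the router side, so it can be cross-checked mechanically before any cable is patched. The following is a minimal sketch of such a check. The data is transcribed from the list above; expanding the ranges in order (23 to GE3, 24 to GE4, and so on) is an assumption, and the function name is made up for illustration.

```python
# Uplinks as seen from each switch: (switch, port) -> edge router port.
SWITCH_SIDE = {
    ("GREEN", 24): "GE2",
    ("BLUE", 24): "GE1",
    ("ORANGE", 23): "GE3",
    ("ORANGE", 24): "GE4",
}

# The same links as seen from the edge router: router port -> (switch, port).
ROUTER_SIDE = {
    "GE5": ("BLUE", 24),
    "GE6": ("GREEN", 24),
    "GE7": ("ORANGE", 23),
    "GE8": ("ORANGE", 24),
}


def mismatches(switch_side, router_side):
    """Return (switch end, port named by switch, port named by router)
    for every uplink whose two descriptions disagree."""
    router_view = {end: port for port, end in router_side.items()}
    problems = []
    for end, port in sorted(switch_side.items()):
        other = router_view.get(end)  # None if the router side omits this link
        if other != port:
            problems.append((end, port, other))
    return problems


if __name__ == "__main__":
    for end, from_switch, from_router in mismatches(SWITCH_SIDE, ROUTER_SIDE):
        print(end, "switch side says", from_switch, "router side says", from_router)
```

With the port numbers exactly as listed, the switch side names edge router ports GE1 to GE4 while the router side names GE5 to GE8, so the check flags every uplink. One of the two views should be treated as authoritative before labeling the cables.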

Switch Configuration

- GREEN Switch

- BLUE Switch

- ORANGE Switch

- Edge Router

Network configuration (routers):

We follow the network scenario with three separate OpenStack infrastructure networks: green, blue and orange. We assign a router to each network, which gives us three routers, and we connect them together with another, smaller router. All of these devices can act as both a switch and a router. We named them as follows:

The Edge router acts as half switch, half router: it bridges the optical link to the gigabit Ethernet ports used by the other routers.

The Green router provides NAT for the 10.254.254.0/24 network. It runs a local DNS server with static DNS records containing the names of our nodes. The DHCP server is disabled. It has three DST-NAT rules that give access to the controller node through the router. The following ports are passed through the router to the controller node: 22, 80, 443, 6080.
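To make the NAT setup concrete, the sketch below generates RouterOS-style firewall commands for the forwarded ports. It is an illustration under stated assumptions, not the configuration actually used: the controller address `10.254.254.10` and the uplink interface name `ether24` are hypothetical, and the sketch emits one rule per port, which is a simplification and not necessarily how the rules on the real router are grouped.

```python
import ipaddress

MGMT_NET = ipaddress.ip_network("10.254.254.0/24")      # from the text
FORWARDED_PORTS = [22, 80, 443, 6080]                    # from the text
CONTROLLER_IP = ipaddress.ip_address("10.254.254.10")    # hypothetical address
UPLINK_IFACE = "ether24"                                 # hypothetical interface name


def dst_nat_rules(controller, ports, in_iface):
    """Build one dst-nat command per forwarded TCP port."""
    if controller not in MGMT_NET:
        raise ValueError(f"{controller} is not inside {MGMT_NET}")
    return [
        "/ip firewall nat add chain=dstnat protocol=tcp "
        f"dst-port={port} in-interface={in_iface} "
        f"action=dst-nat to-addresses={controller} to-ports={port}"
        for port in ports
    ]


def src_nat_rule(out_iface):
    """Build the masquerade command that lets the nodes reach the internet."""
    return (
        "/ip firewall nat add chain=srcnat "
        f"src-address={MGMT_NET} out-interface={out_iface} action=masquerade"
    )


if __name__ == "__main__":
    print(src_nat_rule(UPLINK_IFACE))
    for line in dst_nat_rules(CONTROLLER_IP, FORWARDED_PORTS, UPLINK_IFACE):
        print(line)
```

Generating the commands from a single port list keeps the forwarded ports in one place, so adding or removing a service on the controller node only changes `FORWARDED_PORTS`.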

The Blue router acts purely as a switch; nothing is configured on it.

The Orange router acts as a router for the OpenStack compute node.

All routers are set to draw graphs of memory, CPU, disk and traffic usage.