Nova System Architecture

Nova consists of several server processes, each performing a different function. Users interact with Nova through a REST API, while internal Nova components communicate through an RPC message-passing mechanism. RPC messaging is handled by the oslo.messaging library, an abstraction on top of message queues. Most of the major Nova components can run on multiple servers and have a manager that listens for RPC messages. The one major exception is nova-compute, where a single process runs on the hypervisor it is managing (except when using the VMware or Ironic drivers).

Nova deployments can be scaled horizontally using a deployment sharding concept called cells. However, for the stable version of OpenStack current at the time of writing, this article considers a non-cells Nova deployment (neither cells v1 nor cells v2).

The figure below shows the Nova system architecture, including the key components of a typical non-cells Nova deployment:

As the figure shows, Nova consists of four main components:

- nova-api

- nova-conductor

- nova-scheduler

- nova-compute

as well as 3 other trivial components, namely:

- nova-cert

- nova-consoleauth

- nova-novncproxy

Also, previously, the following components were in Nova:

- nova-network

- nova-api-metadata

These two components are now considered legacy and have been replaced by Neutron. The following figure summarizes the points above:

Nova Components Overview

Blue marks the fundamental Nova components, gray and red mark the legacy components, and light green marks the trivial Nova components.

A Few Points:

Although the elements in light green are considered trivial, that is, not part of the main Nova components, they still play an important role. nova-cert, for example, is a server daemon that provides the Nova Cert service for X.509 certificates. It is used to generate certificates for euca-bundle-image and is only needed for the EC2 API. For more, see here.

As previously stated, Nova internally uses RPC to send and receive messages. Processes that insert messages into the distributed message queue are called invokers, while the receiving processes, which take messages from the distributed message queue, are called workers.
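
The invoker/worker split can be illustrated with a minimal in-process sketch. It uses Python's standard `queue` and `threading` modules as a stand-in for the distributed message queue; it does not use oslo.messaging, and the message layout and the `build_instance` method name are assumptions made only for illustration.

```python
import queue
import threading

# Hypothetical in-process stand-in for the distributed message queue.
message_queue = queue.Queue()
results = []


def worker():
    """Worker: takes messages from the queue and processes them."""
    while True:
        message = message_queue.get()
        if message is None:  # sentinel value: stop the worker
            break
        results.append(
            f"handled {message['method']} for {message['args']['name']}"
        )


def invoke(method, **args):
    """Invoker: inserts a message into the queue and returns immediately."""
    message_queue.put({"method": method, "args": args})


worker_thread = threading.Thread(target=worker)
worker_thread.start()

invoke("build_instance", name="server-test-1")  # hypothetical method name
message_queue.put(None)  # ask the worker to shut down
worker_thread.join()

print(results)
```

The invoker never calls the worker directly; it only knows the queue, which is what lets the two sides run on different servers in a real deployment.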

The API servers process REST requests, which typically involve database reads/writes, optionally sending RPC messages to other Nova services, and generating responses to the REST calls.

Nova uses a central database that is logically shared between all components. However, to aid upgrades, the DB is accessed through an object layer that ensures an upgraded control plane can still communicate with a nova-compute running the previous release. To make this possible, nova-compute proxies DB requests over RPC to a central manager called nova-conductor.

Nova Components Responsibility

We will now walk through the Nova components one by one and explain each in detail. We start with the main components and work toward a general overview of what goes on inside Nova.

1. Nova-API

An HTTP Web Service

Accepts & Responds to User’s Compute API calls

Supports OpenStack Compute API, Amazon EC2 API, Admin API.

Initiates Orchestration Activities.

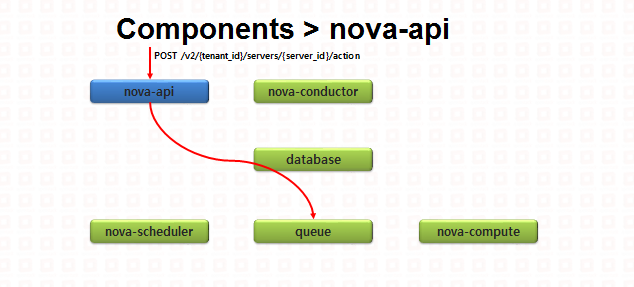

nova-api receives a request and passes it down to the queue to be processed. The following scenario illustrates this:

Below, a sample POST request to the nova-api is specified.

POST /v2/{tenant_id}/servers

Request Body

    {
        "server": {
            "name": "server-test-1",
            "imageRef": "b5660a6e-4b46-4be3-9707-6b47221b454f",
            "flavorRef": "2",
            "max_count": 1,
            "min_count": 1,
            "networks": [
                {
                    "uuid": "d32019d3-bc6e-4319-9c1d-6722fc136a22"
                }
            ],
            "security_groups": [
                {
                    "name": "default"
                },
                {
                    "name": "another-secgroup-name"
                }
            ]
        }
    }
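
As a rough sketch, the request above could be assembled in Python with the standard library alone. The endpoint URL, tenant ID, and token below are placeholders invented for illustration, not values from a real deployment, and the request is only built here, not sent.

```python
import json
import urllib.request

# Assumed values for illustration only; substitute those of a real deployment.
NOVA_ENDPOINT = "http://controller.example:8774"  # hypothetical endpoint
TENANT_ID = "demo-tenant"                         # hypothetical tenant ID
AUTH_TOKEN = "replace-with-keystone-token"        # hypothetical Auth Token

body = {
    "server": {
        "name": "server-test-1",
        "imageRef": "b5660a6e-4b46-4be3-9707-6b47221b454f",
        "flavorRef": "2",
        "max_count": 1,
        "min_count": 1,
        "networks": [{"uuid": "d32019d3-bc6e-4319-9c1d-6722fc136a22"}],
        "security_groups": [
            {"name": "default"},
            {"name": "another-secgroup-name"},
        ],
    }
}

request = urllib.request.Request(
    url=f"{NOVA_ENDPOINT}/v2/{TENANT_ID}/servers",
    data=json.dumps(body).encode("utf-8"),
    headers={
        "Content-Type": "application/json",
        "X-Auth-Token": AUTH_TOKEN,  # token previously obtained from Keystone
    },
    method="POST",
)

# urllib.request.urlopen(request) would actually send the request;
# it is omitted because the endpoint above is a placeholder.
print(request.get_method(), request.full_url)
```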

2. Nova-Conductor

It acts as a database proxy, that is, an intermediary between the compute node and the database.

Why do we need a proxy?

- Because compute nodes are the least trusted of the services in OpenStack and they host tenant instances.

The following figure shows how nova-conductor works:

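The motivation for nova-conductor can be summarized as follows:

- It restricts services to executing predefined operations with parameters, which prevents compute nodes from directly accessing or modifying the database.

- Direct database access complicates fine-grained access control and the auditing of data access; the focus of nova-conductor is on improving security.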
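
The proxy idea can be sketched in a few lines of Python. This is not Nova's conductor code: the `Conductor` class, its operation names, and the in-memory `Database` are hypothetical and only illustrate "named operations with parameters" plus an audit trail.

```python
class Database:
    """Hypothetical stand-in for the central Nova database."""

    def __init__(self):
        self.instances = {}


class Conductor:
    """Hypothetical proxy exposing only named operations with parameters."""

    ALLOWED_OPERATIONS = {"instance_create", "instance_get"}

    def __init__(self, database):
        self._db = database
        self.audit_log = []  # every attempted call is recorded here

    def call(self, caller, operation, **params):
        self.audit_log.append((caller, operation))
        if operation not in self.ALLOWED_OPERATIONS:
            raise PermissionError(f"{operation} is not permitted")
        return getattr(self, "_" + operation)(**params)

    def _instance_create(self, uuid, name):
        self._db.instances[uuid] = {"name": name, "state": "building"}
        return self._db.instances[uuid]

    def _instance_get(self, uuid):
        return self._db.instances[uuid]


conductor = Conductor(Database())

# The compute node never touches the database object directly.
conductor.call("compute-1", "instance_create", uuid="abc", name="server-test-1")
record = conductor.call("compute-1", "instance_get", uuid="abc")

try:
    conductor.call("compute-1", "drop_all_tables")  # not an allowed operation
    rejected = False
except PermissionError:
    rejected = True

print(record, rejected, conductor.audit_log)
```

Because every request passes through `call`, access control and auditing live in one place instead of being spread across the least trusted nodes.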

3. Nova-Compute

A worker Daemon

- Creates and terminates virtual machines through hypervisor APIs.

Note:

Nova supports multiple hypervisors because nova-compute talks to them through an abstraction layer, the driver. This is shown in the following figure:

Hypervisor Types:

- Type-1: bare metal hypervisor.

- Type-2: hosted hypervisor.
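
The driver abstraction mentioned in the note above can be sketched as follows. The classes are simplified and hypothetical (the fake drivers only record instance names and call no hypervisor API), but they show how the compute manager can stay independent of the hypervisor in use.

```python
from abc import ABC, abstractmethod


class ComputeDriver(ABC):
    """Simplified, hypothetical driver interface used by the compute manager."""

    @abstractmethod
    def spawn(self, instance_name):
        """Create and start a virtual machine."""

    @abstractmethod
    def destroy(self, instance_name):
        """Terminate a virtual machine."""


class FakeLibvirtDriver(ComputeDriver):
    """Illustrative driver; a real one would call the hypervisor API."""

    def __init__(self):
        self.running = set()

    def spawn(self, instance_name):
        self.running.add(instance_name)
        return f"libvirt: started {instance_name}"

    def destroy(self, instance_name):
        self.running.discard(instance_name)
        return f"libvirt: destroyed {instance_name}"


class FakeVMwareDriver(ComputeDriver):
    """Second illustrative driver with the same interface."""

    def __init__(self):
        self.running = set()

    def spawn(self, instance_name):
        self.running.add(instance_name)
        return f"vmware: started {instance_name}"

    def destroy(self, instance_name):
        self.running.discard(instance_name)
        return f"vmware: destroyed {instance_name}"


class ComputeManager:
    """Depends only on the driver interface, not on a concrete hypervisor."""

    def __init__(self, driver):
        self.driver = driver

    def create_vm(self, instance_name):
        return self.driver.spawn(instance_name)

    def terminate_vm(self, instance_name):
        return self.driver.destroy(instance_name)


for driver in (FakeLibvirtDriver(), FakeVMwareDriver()):
    manager = ComputeManager(driver)
    print(manager.create_vm("server-test-1"))
    print(manager.terminate_vm("server-test-1"))
```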

General Scenario:

A general scenario of how a compute node is accessed is shown in the following figure. Because nova-compute receives messages, it is a worker daemon (i.e., not an invoker).

First, a request to create a VM is received by nova-api, which then passes it down to the distributed message queue. The scheduler, which is discussed below, takes the request from the queue, processes it, and selects a nova-compute node to run the VM on. The scheduler encapsulates this selection in a message and puts it on the queue, from where it finds its way to the designated compute node. The specified VM is then run on the hypervisor of that node.

4. Nova-Scheduler

The scheduler takes VM requests from the queue and determines which compute node should host each one. This is shown in the figure below:

The big question is: how does the scheduler do that?

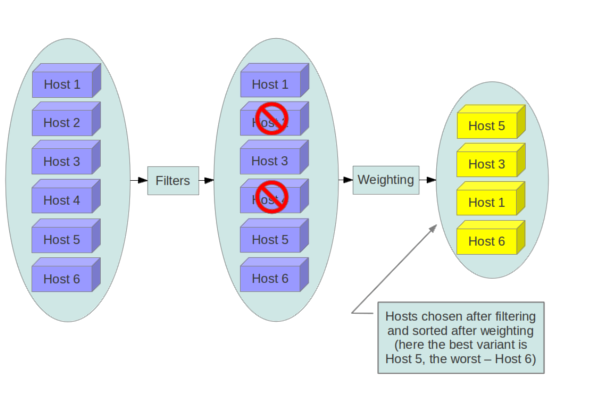

It is a two-step process: filtering and weighing.

FilterScheduler is the default scheduler in nova-scheduler which supports both filtering and weighing.

The following figure demonstrates how the nova-scheduler works internally:

Filtering:

Hosts are filtered according to the filtering policy, which can consist of any of the following filters or of custom ones:

1- RetryFilter

Prevents the scheduler from retrying a host that was already selected for a request and failed to respond to it.

2- AvailabilityZoneFilter

Filters hosts by availability zone.

3- RamFilter

Selects hosts that have sufficient RAM. Note that overcommitment is possible through configuration.

4- ComputeFilter

Passes all hosts that are operational and enabled.

5- ComputeCapabilitiesFilter

Passes hosts that satisfy the extra specs associated with the instance type.

6- ImagePropertiesFilter

Filters hosts based on the properties defined on the instance's image.

- lots of other filters, see here for more.

Note:

All of the filters mentioned above are defined as filter classes under the directory /nova/scheduler/filters in the OpenStack source code. Each of these classes has a method named host_passes which, as the name suggests, decides whether a host passes the filter. The host_passes method of the RamFilter class, for example, is as follows:

    def host_passes(self, host_state, filter_properties):
        """Only return hosts with sufficient available RAM."""
        instance_type = filter_properties.get('instance_type')
        requested_ram = instance_type['memory_mb']
        free_ram_mb = host_state.free_ram_mb
        total_usable_ram_mb = host_state.total_usable_ram_mb

        ram_allocation_ratio = self._get_ram_allocation_ratio(host_state,
                                                              filter_properties)

        memory_mb_limit = total_usable_ram_mb * ram_allocation_ratio
        used_ram_mb = total_usable_ram_mb - free_ram_mb
        usable_ram = memory_mb_limit - used_ram_mb
        if not usable_ram >= requested_ram:
            LOG.debug(_("%(host_state)s does not have %(requested_ram)s MB "
                        "usable ram, it only has %(usable_ram)s MB usable ram."),
                      {'host_state': host_state,
                       'requested_ram': requested_ram,
                       'usable_ram': usable_ram})
            return False

        # save oversubscription limit for compute node to test against:
        host_state.limits['memory_mb'] = memory_mb_limit
        return True
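
To make the arithmetic in host_passes easier to follow, here is a standalone sketch of the same calculation. The function name `ram_filter_passes` and the sample numbers are made up for illustration; only the formula is taken from the method above.

```python
def ram_filter_passes(total_usable_ram_mb, free_ram_mb,
                      ram_allocation_ratio, requested_ram_mb):
    """Repeat the RamFilter arithmetic on plain numbers (hypothetical helper)."""
    # Overcommitment: the host may promise more RAM than it physically has.
    memory_mb_limit = total_usable_ram_mb * ram_allocation_ratio
    used_ram_mb = total_usable_ram_mb - free_ram_mb
    usable_ram = memory_mb_limit - used_ram_mb
    return usable_ram >= requested_ram_mb


# Sample host: 1000 MB total, 200 MB free, allocation ratio 1.5.
# limit = 1500 MB, used = 800 MB, usable = 700 MB.
print(ram_filter_passes(1000, 200, 1.5, 512))  # 512 MB fits into 700 MB
print(ram_filter_passes(1000, 200, 1.5, 800))  # 800 MB does not fit
print(ram_filter_passes(1000, 200, 1.0, 512))  # no overcommit: only 200 MB usable
```

With a ratio of 1.0 the usable RAM equals the free RAM, so the ratio is exactly the knob that enables overcommitment.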

Weighing:

The scheduler applies cost functions to each host that passed filtering and calculates a weight for it; the host with the lowest weight wins.
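
The two steps, filtering followed by weighing, can be combined in a short sketch. The host data, the `filter_hosts` helper, and the `ram_cost` cost function are hypothetical; the real scheduler uses the filter classes and its own configured cost functions.

```python
# Hypothetical host states as the scheduler might see them.
hosts = [
    {"name": "node-1", "free_ram_mb": 2048, "enabled": True},
    {"name": "node-2", "free_ram_mb": 8192, "enabled": True},
    {"name": "node-3", "free_ram_mb": 16384, "enabled": False},
    {"name": "node-4", "free_ram_mb": 512, "enabled": True},
]


def filter_hosts(hosts, requested_ram_mb):
    """Step 1: keep only enabled hosts with enough free RAM."""
    return [
        host for host in hosts
        if host["enabled"] and host["free_ram_mb"] >= requested_ram_mb
    ]


def ram_cost(host):
    """Step 2: assumed cost function; more free RAM means a lower cost."""
    return -host["free_ram_mb"]


def select_host(hosts, requested_ram_mb):
    """Filter the hosts, then pick the one with the lowest cost."""
    candidates = filter_hosts(hosts, requested_ram_mb)
    if not candidates:
        raise RuntimeError("no valid host found")
    return min(candidates, key=ram_cost)


chosen = select_host(hosts, requested_ram_mb=1024)
print(chosen["name"])
```

Here node-3 is dropped because it is disabled and node-4 because it lacks RAM; of the two remaining hosts, the one with more free RAM has the lower cost.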

The scheduler manager then sends an RPC call to the compute manager of the selected host. The compute manager receives it from the queue and runs its create_instance method, resulting in a running VM instance. The following figure demonstrates the process:

A Walkthrough Over the Complete Scenario

The following figure shows what happens inside the Nova components when a request arrives at nova-api:

First, Horizon or the CLI receives a request to create a new instance with the specified properties. The request is authenticated by Keystone, and the requester receives an Auth Token as well as a service catalog. If authentication succeeds, the request is passed to nova-api with the Auth Token embedded in it. Because HTTP is stateless, nova-api validates the Auth Token against Keystone, so that an intruder cannot reach other internal Nova components without a valid Auth Token.

nova-api then accesses the shared logical DB to read and write the necessary information, and finally puts the request on the queue; that is, nova-api invokes the nova-scheduler worker process. nova-scheduler takes the request from the queue, processes it, fetches the information it needs from the DB, and selects an appropriate compute node for the instance based on that information and the configured FilterScheduler. It then invokes the selected nova-compute worker process by sending an RPC message to the compute manager of the selected compute node.

The compute node must register the VM information in the shared logical DB before actually allocating resources. Because the compute node is the least trusted component, it accesses the DB only through a database proxy, nova-conductor. Once the VM information has been registered in the DB, nova-compute allocates the specified resources by contacting external components such as Glance, Cinder, and Neutron, and then contacts its hypervisor to run the instance.
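
The whole walkthrough can be condensed into a toy simulation. Everything in it is an assumption made for illustration: the services are plain functions, the queues are in-memory deques, token validation is a set lookup instead of a call to Keystone, and the calls to Glance, Cinder, Neutron, and the hypervisor are reduced to a trace entry.

```python
from collections import deque

# In-memory stand-ins for the message queue topics and the shared database.
queues = {"scheduler": deque(), "compute-1": deque(), "compute-2": deque()}
database = {}
trace = []

VALID_TOKENS = {"token-123"}                    # hypothetical accepted tokens
HOSTS = {"compute-1": 1024, "compute-2": 4096}  # hypothetical free RAM in MB


def nova_api(token, request):
    """Validate the token, then invoke the scheduler through the queue."""
    if token not in VALID_TOKENS:
        raise PermissionError("invalid auth token")
    trace.append("api: token validated")
    queues["scheduler"].append(request)
    trace.append("api: request queued for scheduler")


def nova_scheduler():
    """Take a request, pick a host, and forward the request to that host."""
    request = queues["scheduler"].popleft()
    candidates = [h for h, free in HOSTS.items() if free >= request["ram_mb"]]
    host = max(candidates, key=HOSTS.get)  # assumed rule: most free RAM wins
    queues[host].append(request)
    trace.append(f"scheduler: selected {host}")
    return host


def nova_conductor(operation, **params):
    """Database proxy: the only code path that touches the database."""
    if operation == "instance_create":
        database[params["name"]] = {"host": params["host"], "state": "building"}
    elif operation == "instance_update":
        database[params["name"]]["state"] = params["state"]
    trace.append(f"conductor: {operation}")


def nova_compute(host):
    """Register the VM through the conductor, then start the instance."""
    request = queues[host].popleft()
    nova_conductor("instance_create", name=request["name"], host=host)
    trace.append(f"{host}: resources allocated, hypervisor started instance")
    nova_conductor("instance_update", name=request["name"], state="active")


nova_api("token-123", {"name": "server-test-1", "ram_mb": 2048})
selected = nova_scheduler()
nova_compute(selected)

for step in trace:
    print(step)
```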
